
Liquid AI Unveils LFM2-VL

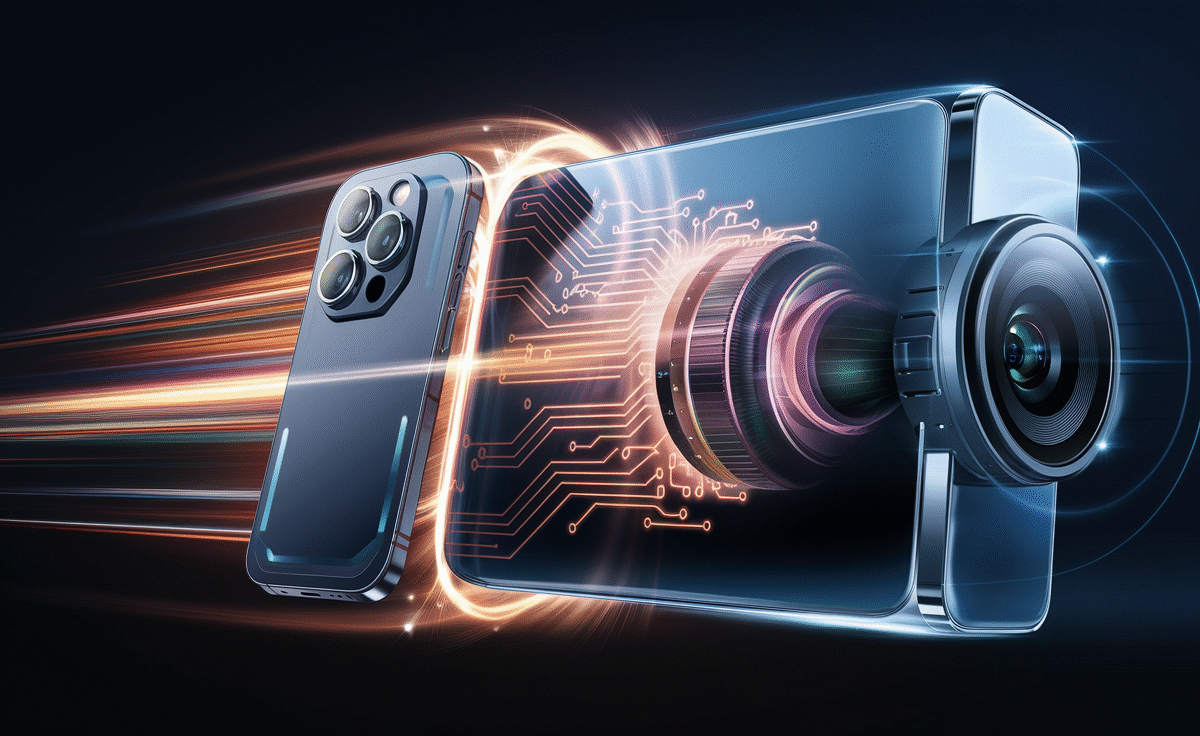

Liquid AI has introduced LFM2-VL, a new family of vision-language foundation models designed for efficient deployment across a wide range of hardware, including smartphones, laptops, wearables, and embedded systems. The company says the models offer low latency, high accuracy, and the flexibility needed for real-world applications. LFM2-VL builds on the existing LFM2 architecture and extends it to multimodal processing, accepting both text and image inputs at varying resolutions. According to Liquid AI, the models deliver up to twice the GPU inference speed of comparable vision-language models while remaining competitive on standard benchmarks.

Insights from Liquid AI Leadership

“Efficiency is our product,” Liquid AI co-founder and CEO Ramin Hasani wrote in a post on X announcing the new model family. The LFM2-VL models are released with open weights in two sizes, 450M and 1.6B parameters, and run up to 2× faster on GPU while maintaining competitive accuracy. They process images natively at up to 512×512 pixels and use smart patching for larger images.

Model Variants

The release includes two model sizes:

– LFM2-VL-450M: A hyper-efficient model with under half a billion parameters, designed for highly resource-constrained environments.

– LFM2-VL-1.6B: A more capable model that remains lightweight enough for single-GPU and device-based deployment.

Both variants process images at native resolutions of up to 512×512 pixels, avoiding distortion or unnecessary upscaling. For larger images, the system applies non-overlapping patching and includes a thumbnail for global context, enabling the model to capture both intricate details and the broader scene.
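The patching scheme described above can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not Liquid AI's preprocessing code: the function name `plan_tiles`, the simple ceiling-based tile grid, and the rule of shrinking the thumbnail so its longer side is 512 pixels are all hypothetical choices made for clarity. The real processor may resize images first or choose the tile grid differently.

```python
import math

TILE = 512  # native resolution cited for LFM2-VL; everything else here is assumed


def plan_tiles(width, height, tile=TILE):
    """Hypothetical sketch: return (tile boxes, thumbnail size) for a width x height image.

    Images that fit within tile x tile are used as-is (one box, no thumbnail).
    Larger images are cut into a grid of non-overlapping tile x tile boxes
    (boxes on the right and bottom edges may be smaller), plus one downscaled
    thumbnail of the whole image for global context.
    Boxes are (left, top, right, bottom) in pixels.
    """
    if width <= tile and height <= tile:
        return [(0, 0, width, height)], None

    cols = math.ceil(width / tile)
    rows = math.ceil(height / tile)
    boxes = []
    for r in range(rows):
        for c in range(cols):
            left, top = c * tile, r * tile
            boxes.append((left, top, min(left + tile, width), min(top + tile, height)))

    # Assumed thumbnail rule: shrink so the longer side equals `tile`, keeping aspect ratio.
    scale = tile / max(width, height)
    thumb = (max(1, round(width * scale)), max(1, round(height * scale)))
    return boxes, thumb


boxes, thumb = plan_tiles(1024, 1024)
print(len(boxes), thumb)  # 4 (512, 512)
```

Under these assumptions, a 1024×1024 input becomes four 512×512 tiles plus a 512×512 thumbnail, while a 400×300 image passes through untouched. Keeping the thumbnail alongside the tiles is what lets the model see the whole scene even though each tile covers only part of it.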

The Vision Behind Liquid AI

Liquid AI was founded by former researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) with the mission of developing AI architectures that transcend the conventional transformer model. The company’s flagship innovation, the Liquid Foundation Models (LFMs), is based on principles from dynamical systems, signal processing, and numerical linear algebra. This results in general-purpose AI models capable of processing text, video, audio, time series, and other sequential data.

Unlike traditional architectures, Liquid’s approach aims to deliver competitive or superior performance with significantly fewer computational resources. According to the company, the design supports real-time adaptability during inference while keeping memory requirements low, making LFMs suitable both for large-scale enterprise applications and for resource-limited edge deployments.

Launch of the Liquid Edge AI Platform (LEAP)

In July 2025, Liquid AI expanded its platform strategy by launching the Liquid Edge AI Platform (LEAP), a cross-platform SDK designed to simplify deploying small language models (SLMs) on mobile and embedded devices. LEAP is OS-agnostic, supporting both iOS and Android; it works with Liquid’s own models as well as other open-source SLMs, and it includes a library of models as small as 300MB, compact enough for modern smartphones with limited RAM.

Its companion app, Apollo, enables developers to test models entirely offline, aligning with Liquid AI’s focus on privacy-preserving and low-latency AI solutions. Together, LEAP and Apollo demonstrate the company’s commitment to decentralizing AI execution, reducing reliance on cloud infrastructure, and empowering developers to create optimized, task-specific models for real-world applications.

Modular Architecture of LFM2-VL

LFM2-VL employs a modular architecture that combines a language model backbone, a SigLIP2 NaFlex vision encoder, and a multimodal projector. The projector features a two-layer MLP connector with pixel unshuffle, which reduces the number of image tokens and enhances throughput. Users can customize parameters such as the maximum number of image tokens or patches, allowing them to balance speed and quality based on their deployment needs.
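To make the token-reduction idea concrete, here is a minimal pure-Python sketch of pixel unshuffle followed by a two-layer MLP projector. It assumes a downsampling factor of 2, a GELU activation, toy feature sizes, and random weights; none of these come from LFM2-VL's actual configuration, and the helper names are hypothetical. Pixel unshuffle folds each 2×2 neighborhood of encoder patch features into one wider vector, so the number of image tokens passed to the language model drops by a factor of four. If the encoder used 16-pixel patches (also an assumption here), a 256×384 image would produce a 16×24 grid of 384 patch features, which this step reduces to 96 tokens.

```python
import math
import random


def pixel_unshuffle(grid, r=2):
    """Fold each r x r neighborhood of feature vectors into one vector.

    grid: H rows, each a list of W feature vectors (lists of C floats).
    Returns H // r rows of W // r vectors, each of length C * r * r.
    """
    h, w = len(grid), len(grid[0])
    if h % r or w % r:
        raise ValueError("grid height and width must be divisible by r")
    out = []
    for i in range(0, h, r):
        row = []
        for j in range(0, w, r):
            merged = []
            for di in range(r):
                for dj in range(r):
                    merged.extend(grid[i + di][j + dj])
            row.append(merged)
        out.append(row)
    return out


def gelu(x):
    # tanh approximation of GELU (activation choice is an assumption)
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(x, weight, bias):
    # weight holds one row per output feature, each of length len(x)
    return [sum(wi * xi for wi, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def random_layer(n_in, n_out, rng):
    # Hypothetical random init; a trained projector would load learned weights
    weight = [[rng.gauss(0.0, 0.02) for _ in range(n_in)] for _ in range(n_out)]
    return weight, [0.0] * n_out


def project(grid, layer1, layer2, r=2):
    """Pixel unshuffle, then apply the two-layer MLP to each merged vector."""
    tokens = []
    for row in pixel_unshuffle(grid, r):
        for vec in row:
            hidden = [gelu(v) for v in linear(vec, *layer1)]
            tokens.append(linear(hidden, *layer2))
    return tokens


rng = random.Random(0)
C, D_HIDDEN, D_MODEL = 8, 32, 16  # toy sizes, not the real model's
grid = [[[rng.random() for _ in range(C)] for _ in range(24)] for _ in range(16)]
layer1 = random_layer(C * 4, D_HIDDEN, rng)
layer2 = random_layer(D_HIDDEN, D_MODEL, rng)
tokens = project(grid, layer1, layer2)
print(len(grid) * len(grid[0]), "->", len(tokens))  # 384 -> 96
```

The trade-off is that each token now carries four patches' worth of features, and the MLP must compress that wider vector into the language model's embedding size. Capping the maximum number of image tokens or patches works on the same speed-versus-detail budget from the other direction.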

Liquid AI trained the models on approximately 100 billion multimodal tokens drawn from open datasets and proprietary synthetic data. The models achieve competitive results across a range of vision-language benchmarks. Notably, LFM2-VL-1.6B scores 65.23 on RealWorldQA, 58.68 on InfoVQA, and 742 on OCRBench, while maintaining strong performance on multimodal reasoning tasks. In Liquid AI’s inference tests, which used a standard workload of a 1024×1024 image and a short prompt, LFM2-VL achieved the fastest GPU processing times in its category.